Comments

Adventurous_Class_22 t1_j8w6m6o wrote

Means I can do my own Midjourney for $5, but only for a single image? 🤔

Miguel7501 t1_j8w97h8 wrote

Is that the same model every time?

Miguel7501 t1_j8w9ckm wrote

No, this is classification. You can train an AI that's pretty good at identifying images for $5.

kingchongo t1_j8wbrul wrote

Adjust for inflation and AI can afford a carton of eggs

[deleted] t1_j8wg9bf wrote

[removed]

viridiformica t1_j8wianb wrote

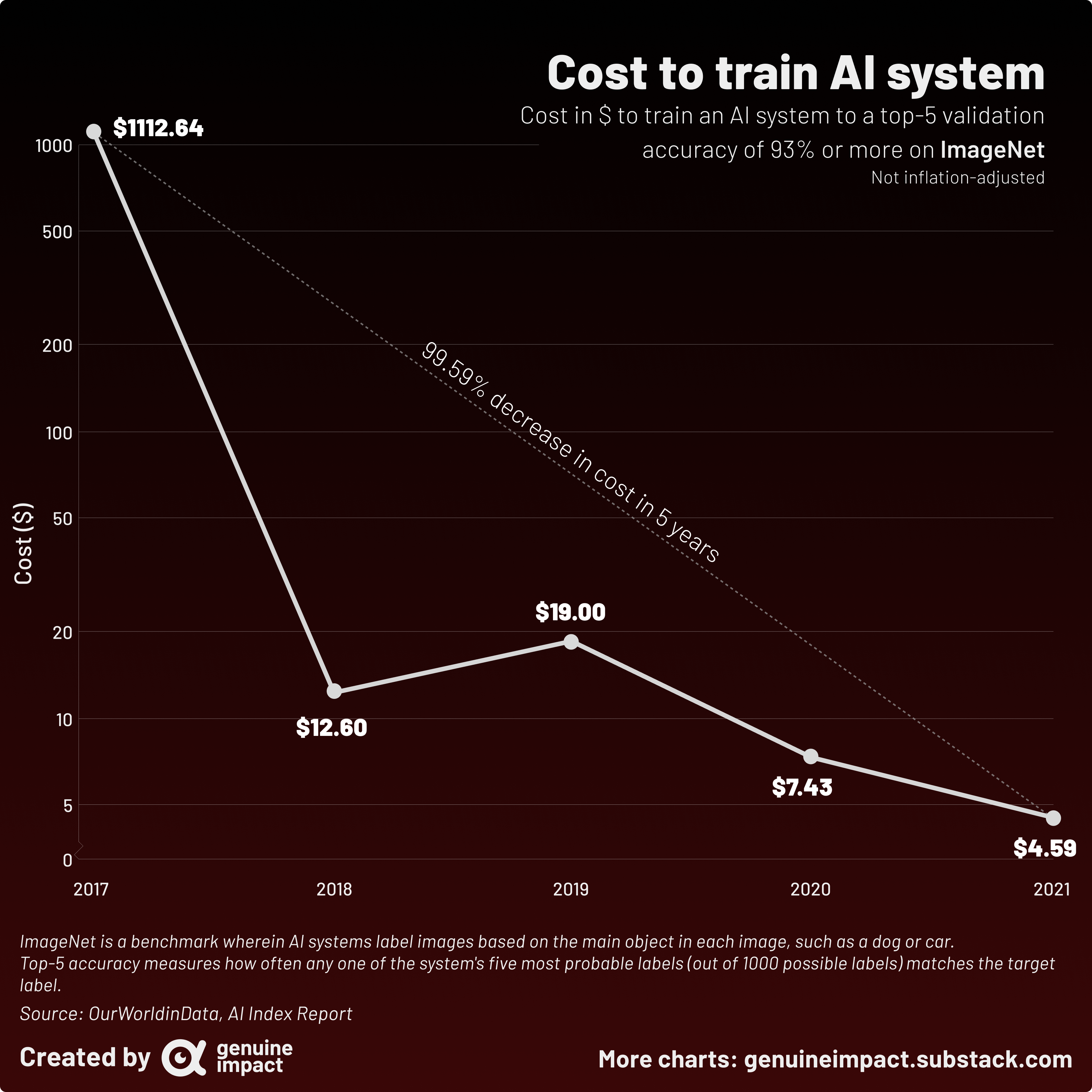

Man, this is terrible. The log scale completely distorts the trend: essentially, costs fell by a factor of 100 between 2017 and 2018, and the rest is trivial.
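A rough Python sketch of this point, comparing how much axis height each drop gets on a linear versus a log axis. It assumes the thread's approximate figures rather than the chart's real data: a ~100x fall in the first year (so a hypothetical 2017 cost of about $1,200, i.e. 100 × $12), then $12 to $5 by 2021.

```python
import math

def share_of_linear_axis(start, end, axis_span):
    """Fraction of a linear axis covered by a move from start to end."""
    return abs(start - end) / axis_span

def log_decades(start, end):
    """Powers of ten (decades) a move spans on a log10 axis."""
    return abs(math.log10(start) - math.log10(end))

# Approximate values taken from comments in this thread (assumptions).
cost_2017 = 1200.0  # hypothetical: ~100x the 2018 cost
cost_2018 = 12.0
cost_2021 = 5.0

first = log_decades(cost_2017, cost_2018)  # about 2 decades (100x)
later = log_decades(cost_2018, cost_2021)  # about 0.38 decades (2.4x)
print(f"log axis: later drop is {later / first:.0%} of the first drop's height")

span = cost_2017 - cost_2021
print(f"linear axis: first drop {share_of_linear_axis(cost_2017, cost_2018, span):.1%}, "
      f"later drop {share_of_linear_axis(cost_2018, cost_2021, span):.1%}")
```

On the log axis the 2018 to 2021 decline gets roughly a fifth of the height of the first drop; on a linear axis it gets well under 1% of the span.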

[deleted] t1_j8wih36 wrote

[removed]

mnimatt t1_j8wkzz1 wrote

It only fell from $12 to $5 over 3 years. The really interesting thing here is pretty obviously 2017 to 2018.

mviz1 t1_j8wlj5z wrote

Still a 50%+ decline over 3 years. Pretty good imo.
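To put numbers on this, a small Python sketch of plain and annualized percent change, using the $12 (2018), $19 (2019) and $5 (2021) figures quoted in this thread rather than verified data:

```python
def percent_change(old, new):
    """Percentage change from old to new (negative means a decline)."""
    return (new - old) / old * 100

def annualized_change(old, new, years):
    """Constant yearly percentage change that compounds from old to new."""
    return ((new / old) ** (1 / years) - 1) * 100

# Figures as quoted by commenters, not taken from the original source.
print(f"{percent_change(12, 5):.1f}% from 2018 to 2021")       # -58.3%
print(f"{annualized_change(12, 5, 3):.1f}% per year")          # -25.3%
print(f"{percent_change(19, 5):.1f}% from 2019 to 2021")       # -73.7%
```

So the 3-year drop works out to roughly 25% per year when compounded, and 2019 to 2021 is closer to 74% than 75%.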

[deleted] t1_j8wlkgu wrote

[removed]

Net-Specialist t1_j8wlpsp wrote

What drove the 2017 to 2018 cost reduction?

[deleted] t1_j8wlume wrote

[deleted]

Dykam t1_j8wm1ju wrote

There's some sense in having the second trend (2018-2021) visible, but it should be on a different graph; the two pieces of data are not really compatible.

workingatbeingbetter t1_j8wmdi9 wrote

As someone who does market valuations and sets the prices for some of the most popular AI datasets, this is very misleading if not wholly inaccurate. In any case, I’m gonna look into these cited sources to see how they even came up with these numbers.

patrick66 t1_j8wmpxh wrote

No, it’s a benchmark that any image classification model can use to test accuracy. There would still be a pretty massive improvement on the graph if it were all the same model, since GPU efficiency for deep learning has skyrocketed since 2017, but a lot of the graph is also just modern models being quicker to train.

[deleted] t1_j8wmq9d wrote

[removed]

QuirkyAverageJoe t1_j8wn67m wrote

What's this scale on the vertical axis? Ewww.

Utoko t1_j8wo1zf wrote

Or leave it at log scale and it is completely fine. It is labeled, so where is the issue?

We can make 3 posts about it, split it up, zoom in and out, or just use a log scale...

[deleted] t1_j8wobmf wrote

[removed]

Psyese t1_j8woyhg wrote

So what is this improvement attributed to? Hardware, or better AI system designs?

Dykam t1_j8wpt3m wrote

Because the image actually makes a separate point of saying "99.59% in 5 years", which isn't all that interesting, since it's almost the same percentage as the 98.92% drop in just the first year. In a way, it presents the data so that it looks less impressive than it is.

This is /r/dataisbeautiful, not r/whateverlooksnice, so critiquing data presentation seems appropriate.
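The two cumulative percentages on the chart can be combined to get the decline after the first year, since each one says what fraction of the 2017 cost is left. A minimal Python sketch using only the 98.92% and 99.59% figures quoted above:

```python
def remaining(decline_pct):
    """Fraction of the starting cost left after a cumulative decline (in %)."""
    return 1 - decline_pct / 100

def decline_between(cum_decline_a, cum_decline_b):
    """Percent decline from point A to point B, given each point's
    cumulative decline (in %) from the same starting value."""
    return (1 - remaining(cum_decline_b) / remaining(cum_decline_a)) * 100

later = decline_between(98.92, 99.59)
print(f"{later:.0f}% further decline after the first year")  # 62%
```

That works out to roughly a 62% further drop over the remaining years, which quoting both figures relative to 2017 tends to hide.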

NetaGator t1_j8wqyhx wrote

And a shitty one too... Same as their veiled ad yesterday: an irrelevant data set and an infographic masquerading as data...

Qbek-san t1_j8wsugw wrote

logarithmic decline

taleofbenji t1_j8wt0ui wrote

Yup, and picking the most trendy topic possible, while ironically revealing they know fuck all about it!

polanas2003 t1_j8wvpq1 wrote

Well, it's a trade-off between seeing the big drop and nothing else, or seeing the big drop and, thanks to the log-scale graph, also seeing the further advancements clearly.

That's what I was always taught to do to provide a better glimpse of data in statistics and econometrics classes.

Maciek300 t1_j8wx19l wrote

Not 75%. It only looks like that. It's actually 98.2% in two years. That's exactly why the post distorts the data.

viridiformica t1_j8wx27r wrote

If this were in a scientific publication where seeing the actual numbers in each year was important, I might agree. But this is a data visualisation purporting to show the trend in costs over 5 years, and it is failing to show the main trend clearly. It's the difference between 'showing the data' and 'showing the story'

rainliege t1_j8x0xyj wrote

How did it get more expensive in 2019?

Utoko t1_j8x123v wrote

But your suggestion to split it into multiple graphs, or to only show data from 2017 and 2018, is far worse.

Then everyone would wonder how it developed after 2018.

You can make the same claims about every stock market chart displayed in log scale: "These movements don't matter because 96% of the growth was in the past."

But the recent development is very important too. In this case, it shows that the decline still continues.

It is still down 35% in the last year, which lets you see we are not even close to the end of the road.

One might argue the 98.92% decrease says a lot less, because when something is not done at scale, it is always extremely expensive at first. So I don't agree that they make it look less impressive than it is.

So as long as your point is that people don't understand how to read log charts, I still disagree with you.

Bewaretheicespiders t1_j8x3dz9 wrote

"to a top-5 accuracy of 93% or more".

So they take the model that is cheapest to train while still reaching that top-5 accuracy.
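For reference, top-5 accuracy counts an image as correct if the true label is anywhere among the model's five highest-scoring classes. A minimal pure-Python sketch of that metric, plus the "cheapest run that reaches 93%" selection described above; the scores, model names and costs are all made up for illustration:

```python
def top_k_accuracy(scores, labels, k=5):
    """Fraction of examples whose true label is among the k highest scores.

    scores: one list of per-class scores per example
    labels: the true class index for each example
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ranked by score, highest first; keep the first k.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)

def cheapest_meeting_target(runs, target=0.93):
    """Lowest-cost training run whose top-5 accuracy reaches the target."""
    eligible = [r for r in runs if r["top5"] >= target]
    return min(eligible, key=lambda r: r["cost_usd"]) if eligible else None

# Made-up scores over 6 classes for 3 images.
scores = [
    [0.10, 0.30, 0.02, 0.20, 0.25, 0.13],  # true class 0 ranks 5th
    [0.50, 0.20, 0.12, 0.10, 0.05, 0.03],  # true class 5 ranks 6th
    [0.05, 0.70, 0.10, 0.08, 0.04, 0.03],  # true class 1 ranks 1st
]
labels = [0, 5, 1]
print(top_k_accuracy(scores, labels, k=1), top_k_accuracy(scores, labels, k=5))

# Hypothetical training runs (names, accuracies and costs are invented).
runs = [
    {"name": "model_a", "top5": 0.94, "cost_usd": 20.0},
    {"name": "model_b", "top5": 0.91, "cost_usd": 5.0},
    {"name": "model_c", "top5": 0.95, "cost_usd": 12.0},
]
print(cheapest_meeting_target(runs)["name"])  # model_c
```

Note that model_b is cheapest overall but is excluded because it never reaches the 93% threshold.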

[deleted] t1_j8x4f37 wrote

[deleted]

Early_Lab9079 t1_j8x4rhr wrote

What does this mean to me as a consumer of nerds?

Bewaretheicespiders t1_j8x518f wrote

I changed my mind, and I will guess MobileNetV2.

Niekio t1_j8x8e6n wrote

Looks like my crypto investment 😂😂😂

earthlingkevin t1_j8x9jgh wrote

At a high level, our models didn't get much better (there are improvements, of course). The biggest change is that instead of training on a small data set, companies started throwing everything on the internet at it.

Psyese t1_j8xbydd wrote

So basically investment - more electricity, more expenditure.

[deleted] t1_j8xd141 wrote

[deleted]

Maciek300 t1_j8xe0vw wrote

Yeah, OK, it's 75% from 2019 to 2021, but compared to the absolute difference between 2017 and 2018, it's nothing.

xsvfan t1_j8xf392 wrote

> Progress in AI is being driven by availability of large volume of structured data, algorithmic innovations, and compute capabilities. For example, the time to train object detection task like ImageNet to over 90% accuracy has reduced from over 10 hours to few seconds, and the cost declined from over $2,000 to substantially less than $10 within the span of the last three years Perrault et al. (2019)

kursdragon2 t1_j8xfq1r wrote

How can you say making something 75% cheaper in 2 years is nothing lmfao? What the fuck are you on? Of course, once you get to a certain point the absolute numbers are going to look small, but those are still huge improvements.

[deleted] t1_j8xfr75 wrote

[deleted]

earthlingkevin t1_j8xgykb wrote

Basically. More money to GCP or Azure.

ilc15 t1_j8xh1p8 wrote

I would guess improvements across the board: model architectures, hardware and frameworks. While TensorFlow, PyTorch and ResNet all date from mid-2015/2016, I would guess it could take a year for them to be fully integrated (be it improvements in the frameworks, or industries adopting them). TensorFlow and PyTorch are very popular ML packages, and ResNet is an architecture which I thought is more data efficient than its predecessors.

As for the hardware, I don't know enough about the releases; the same goes for updates to the CUDA framework, which improve GPU acceleration.

The_Gristle t1_j8xjf5z wrote

AI will replace most call centers in the next 10 years

space_D_BRE t1_j8xjrlx wrote

So forget taking jobs, it's gonna go for the kill shot and starve us out first. Greatttt🙄

Bewaretheicespiders t1_j8xjz7f wrote

ResNet was far more efficient than VGG, but it's also from 2016.

On the "efficiency first" route, there's been ResNet, then MobileNet, then many versions of EfficientNet.
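Much of MobileNet's efficiency comes from swapping standard convolutions for depthwise separable ones: a k×k filter applied to each input channel separately, followed by a 1×1 pointwise convolution that mixes channels. A quick Python sketch of the weight counts (biases ignored; the 3×3, 256-channel layer is an arbitrary example, not taken from any specific network):

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a k x k convolution mapping c_in channels to c_out."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """One k x k filter per input channel, then a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise

k, c_in, c_out = 3, 256, 256  # arbitrary example layer
std = standard_conv_params(k, c_in, c_out)        # 589,824
sep = depthwise_separable_params(k, c_in, c_out)  # 67,840
print(f"{std:,} vs {sep:,} weights: {std / sep:.1f}x fewer")
```

The ratio simplifies to 1/c_out + 1/k², and the multiply-add count per output position shrinks by the same factor.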

wallstreet_vagabond2 t1_j8xofqo wrote

Could the rise in open source computing power also be a factor?

MrJake2137 t1_j8xr98m wrote

Electricity prices maybe?

ValyrianJedi t1_j8xv46o wrote

Eh, with bitcoin at least it's back to being up 50% from where it was just a month or two ago... I've been riding that one a good long while. I've been buying $10 a day for like 3 years and bought a solid chunk like 2 years before that, so I've been on the roller coaster for like 5 years or so... Definitely a good few times that my stomach has dropped, but it's always worked itself out.

Niekio t1_j8xvco0 wrote

Yeah, that’s true if you didn’t trade with leverage and didn’t invest in sh*tcoins 🥲

epicaglet t1_j8xx44m wrote

I think the price of bitcoin was climbing a lot then. So it might be related to GPU prices?

[deleted] t1_j8xy1gh wrote

[deleted]

Dopple__ganger t1_j8xym9s wrote

Once the AIs unionize the cost is gonna go up dramatically.

[deleted] t1_j8xzbyv wrote

[removed]

splerdu t1_j8y0vw4 wrote

The most obvious thing to me is the introduction of Nvidia's RTX series cards.

PM_ME_WITTY_USERNAME t1_j8yb4oo wrote

What is that open source computing power you speak of?

[deleted] t1_j8ybpio wrote

[removed]

Nekrosiz t1_j8ycmqp wrote

Think he means cloud-based computing power?

As in, rather than having one PC here and one PC there doing the work individually, linking them up and sharing the load.
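When this is done for training, "sharing the load" usually means data parallelism: each machine computes gradients on its own slice of the batch, and the gradients are averaged. A toy single-process Python sketch for a one-weight linear model with squared error (made-up data; real systems exchange gradients over a network rather than in one process):

```python
def gradient(w, xs, ys):
    """Gradient with respect to w of mean((w * x - y) ** 2)."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def data_parallel_gradient(w, xs, ys, workers):
    """Give each worker an equal shard, then average the shard gradients,
    as a synchronous all-reduce step would."""
    assert len(xs) % workers == 0, "toy example assumes equal-sized shards"
    size = len(xs) // workers
    shard_grads = [
        gradient(w, xs[i * size:(i + 1) * size], ys[i * size:(i + 1) * size])
        for i in range(workers)
    ]
    return sum(shard_grads) / workers

# Made-up data, roughly y = 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1]

w = 0.5
for step in range(50):
    w -= 0.01 * data_parallel_gradient(w, xs, ys, workers=4)
print(f"learned w = {w:.3f}")
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so the group of machines takes the same training step one big machine would.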

oozinator1 t1_j8ygin6 wrote

What happened in 2018-2019? Why did the price go up?

[deleted] t1_j8yihgv wrote

[removed]

BecomePnueman t1_j8yjagc wrote

Just wait till they graph 2022 and 2023 when inflation gets it.

flamingobumbum t1_j8yjvjq wrote

You 'consume' nerds? Huh..

[deleted] t1_j8ykv72 wrote

[removed]

HOnions t1_j8yl9sw wrote

I can tell you the price of ImageNet: it’s fucking free.

This has nothing to do with the dataset, but with the price, availability and performance of compute, and the efficiency of the newer models.

Training a VGG19 on a 1080 and training an EfficientNet on a TPU aren’t the same thing.

HOnions t1_j8yll1y wrote

Nah. But you can download Stable Diffusion (what Midjourney is based on) and run it on your machine for free (minus electricity).

FartingBob t1_j8yn9ap wrote

2019 was a lull for bitcoin; graphics cards were plentiful and relatively cheap. There was the first big spike in late 2017/early 2018, then it dropped until late 2020 and went crazy in 2021.

Prestigious-Rip-6767 t1_j8yq0h4 wrote

You don't like AWS?

Gone247365 t1_j8yqdbl wrote

You are OnlyFans?

greenking2000 t1_j8yr0yq wrote

You can donate your idle CPU time to do research if you want.

You install a program, and when your CPU/GPU usage is low, it’ll do calculations for that organisation.

https://en.m.wikipedia.org/wiki/List_of_volunteer_computing_projects

Though he is likely on about cloud computing and got the name wrong.

johnyjohnybootyboi t1_j8yw9k8 wrote

I don't like the uneven scale of the y-axis, it doesn't show the full proportion of the decline

Emotional_Squash9071 t1_j8ywo08 wrote

If something cost a billion $ and you brought the price down to a million $, I’m pretty sure you’d think dropping it from a million to 250k is pretty great too.

OrangeFire2001 t1_j8yzc01 wrote

But is this assuming it’s stealing art from the internet, or is it being paid for?

[deleted] t1_j8z2cni wrote

[deleted]

XkF21WNJ t1_j8z2jyf wrote

It's not even a log scale, there's a 0 on there. The scale is all over the place.

viperex t1_j8z40by wrote

This is what Cathie Wood keeps saying. This is why she's investing heavily in these new fields.

batua78 t1_j8z5mwr wrote

Uhm, well, it depends. You want to keep up to date, and newer models take tons more resources.

willstr1 t1_j8z5qlv wrote

They are just a fan of tangy candy

OTA-J t1_j8z8pxs wrote

Should be plotted in energy consumption

PM_ME_WITTY_USERNAME t1_j8zc52q wrote

I used Folding@Home before AI took over! :>

foodhype t1_j8zci3y wrote

Deep learning techniques improved dramatically in 2017. TPUs were also first introduced in 2016, and it typically takes about 4 years for data centers to completely migrate.

Coreadrin t1_j8zhbpd wrote

What being a low-regulation market does to a mfer.

awkardandsnow111 t1_j8zp8du wrote

Yes. I like em twink with glasses.

bitcoins t1_j8zref9 wrote

Just BTC and hold as long as possible

NerdyToc t1_j8zsi8c wrote

I think the interesting thing is that it rose from 12 to 19.

[deleted] t1_j8zxzc7 wrote

[deleted]

eschatos_ t1_j8zyh4p wrote

But cheese is three times more. Damn.

OkSoBasicallyPeach t1_j8zz9ty wrote

Exponential scale is misleading here, I feel like.

hppmoep t1_j90acma wrote

I have a theory that modern games are doing this.

ChronWeasely t1_j90ajzx wrote

The heck is with the scaling on the y-axis? Fix that wackness. Looks out of proportion and obviously adjusted to fit a narrative.

It's not even consistent. Big, then small physical spaces, then jumping by random amounts as well.

ChronWeasely t1_j90b0of wrote

The "trend line" with the attached conclusion is what makes it egregious and masks the logarithmic nature of the y-axis. Like, it misses the important points by overfitting.

And the interesting thing is really two things:

- in one year, prices fell by 99%

- in subsequent years, prices have fallen another 60%

But it makes it look like there is a continuity that, in reality, doesn't fit a trend line at all, as can be seen in the non-logarithmic version.

Whiterabbit-- t1_j90bp8z wrote

The further advancements are irrelevant. Of the 4 data points, one goes backwards, and the cost only decreased by 50%, compared to the drastic drop the year before.

[deleted] t1_j90cvy8 wrote

[removed]

Sainagh t1_j90fbbm wrote

Wtf is that y-axis?!? Like, why not log scale, or literally any labelling that makes mathematical sense?

"Oh yeah let's put the bars here here and here, looks better that way"

[deleted] t1_j90q725 wrote

[removed]

PsychologicalSet8678 t1_j90us3l wrote

I think it's also about transfer learning. Many are using fixed backbones.
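A toy Python sketch of the fixed-backbone idea: the feature extractor stays frozen, its features are computed once, and only a small logistic-regression head is trained. The backbone here is a hypothetical hand-written function standing in for a pretrained network, and the task is XOR, which a linear model can't learn from the raw inputs but can from these features:

```python
import math

def frozen_backbone(x):
    """Hypothetical stand-in for a pretrained feature extractor.
    Its 'weights' are fixed and never updated during training."""
    a, b = x
    return [a + b, a - b, a * b]

def train_head(data, epochs=5000, lr=0.5):
    """Train only a logistic-regression head with full-batch gradient descent."""
    feats = [(frozen_backbone(x), y) for x, y in data]  # backbone runs once
    w = [0.0, 0.0, 0.0]
    bias = 0.0
    n = len(feats)
    for _ in range(epochs):
        grad_w = [0.0, 0.0, 0.0]
        grad_b = 0.0
        for f, y in feats:
            z = sum(wi * fi for wi, fi in zip(w, f)) + bias
            err = 1 / (1 + math.exp(-z)) - y  # sigmoid(z) minus target
            grad_w = [g + err * fi / n for g, fi in zip(grad_w, f)]
            grad_b += err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        bias -= lr * grad_b
    return w, bias

def predict(w, bias, x):
    """Class 1 if the head's logit on the frozen features is positive."""
    f = frozen_backbone(x)
    return int(sum(wi * fi for wi, fi in zip(w, f)) + bias > 0)

xor = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
w, bias = train_head(xor)
print([predict(w, bias, x) for x, _ in xor])
```

Only four numbers are trained here; in real transfer learning the expensive backbone is likewise never updated, which is roughly why training just a head is so much cheaper than training from scratch.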

PsychologicalSet8678 t1_j90uxhj wrote

If anything, the carbon footprint of training AI models has become bigger and bigger each passing year. The large LLMs are trained on clusters so big that only the very, very top-tier tech companies can afford them.

Kiernian t1_j90v2er wrote

and the only one with a decent fricking screen saver option.

Seriously, the rest of the @Home gang needs to make with the fast Fourier transforms or something equally eye-candy-ish to watch.

fREAKNECk716 t1_j91713d wrote

There is actually no such thing as AI. (...in the form of AI that would typically be imagined from decades of books, TV and movies.)

No matter what, at this time, it always boils down to a computer program that has pre-programmed responses to pre-determined stimuli.

LazerWolfe53 t1_j91cksk wrote

This is the actual metric that should scare people about AI

peter303_ t1_j92q82b wrote

Special-purpose CPUs that perform lower-precision calculations that are fine for neural nets. You need 64-bit floating point for weather prediction, but 8-bit integers work OK for some neural calculations. The previous CPUs could downshift to smaller numbers, but were not proportionally faster. The new ones are. NVIDIA, Google and Apple have special neural chips.
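To make the 8-bit idea concrete, here is a minimal Python sketch of symmetric linear quantization, one common scheme: floats are divided by a single shared scale, rounded, and stored as integers in [-127, 127]. The weights are arbitrary example values, and real accelerators typically use more elaborate per-channel or calibrated schemes:

```python
def quantize_int8(values):
    """Symmetric linear quantization of floats to int8 with one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp into the int8 range used here.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate float values."""
    return [qi * scale for qi in q]

weights = [0.42, -1.37, 0.05, 0.91, -0.003]
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, approx))
print(q)
print(f"max error {max_err:.4f} (bound: scale/2 = {scale / 2:.4f})")
```

Each value then fits in one byte instead of the eight a 64-bit float needs, and the rounding error stays within half a quantization step.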

crimeo t1_j933gfk wrote

New prize for the most ridiculously misleading visualization I've seen on the sub so far.

Literally just a 5 point line graph, and you still managed to absolutely butcher it by using Willy Wonka's Wacky Y Axis where there are no rules and the numbers don't matter.

And boring even if it was done correctly.

And ugly.

crimeo t1_j933xqe wrote

Your statement is wrong and has been for decades. It can definitely respond to brand new stimuli it's never seen before. You seriously think the ChatGPT guys "preprogrammed" the answer to "Give me the recipe for a cake but in Shakespearean iambic pentameter"? Lol? There are also tons of AI systems that for example take any painting you give them and make it look like Van Gogh. The programmers never saw your painting before...

If you want to argue subjectively about the term intelligence, fine, but "preprogrammed responses" as well as "predetermined stimuli" are both objectively wildly incorrect.

crimeo t1_j934f0m wrote

Lol yep, turns out just stealing shit is really cheap. WHO KNEW!

"Price of a candy bar before vs after I started just walking out of the store with them. 100% decrease! Massive efficiency gain!"

crimeo t1_j934utq wrote

Inflation-adjusted wages are slightly HIGHER than they were before COVID. As in, it is in fact easier to afford the cost of living than before. Wages don't just sit around static either.

The core problem is only if you have a lot of cash savings or if you are a creditor

EmbarrassedHelp t1_j93xd3i wrote

It still costs time and money to label your own dataset. This chart appears to be using an older pre-made dataset that's rather limited compared to more recent text-to-image datasets like LAION.

EmbarrassedHelp t1_j93xuij wrote

It should excite people that the technology is still very much democratic in terms of costs, and not something only the 0.1% can afford.

earthlingkevin t1_j94ynbt wrote

AWS is not really the best for ML-related things.

fREAKNECk716 t1_j95kt5v wrote

No, they preprogrammed how to break down that sentence into individual parts and process each one and how they relate to each other.

What you seem to have missed is the part of my statement in parentheses.

crimeo t1_j965i0d wrote

> No, they preprogrammed how to break down that sentence into individual parts and process each one and how they relate to each other.

If you meant "processing", why did you say "stimuli and responses", neither of which is processing?

Regardless, also no, they almost certainly didn't teach it grammar either. Similarly to how you don't teach your 2-year-old child sentence diagramming, in most AI like this, it picks it up from examples, not explicit rules.

They did program in basic fundamentals of learning and conditioning though. Much like your human genes programmed into you, since newborn infants already demonstrate reinforcement learning...

lafuntimes1 t1_j987w45 wrote

Anyone have the actual report on this to link? The ‘source’ is not easy to find. I very much doubt there was a 100x improvement in AI training time in a single year without a major, major caveat. I’m going to guess that there was some major improvement in the ImageNet algorithm itself OR people learned how to train AI on GPUs (which I swear was done much earlier than 2018).

docsms500 t1_j9gwkma wrote

What type of scale is that?

giteam OP t1_j8vze1h wrote

Source

Newsletter

Tools: Figma, Tableau

*edit: title should say 5 years