Submitted by Fit-Meet1359 t3_110vwbz in singularity

Comments

chrisc82 t1_j8bfvnd wrote

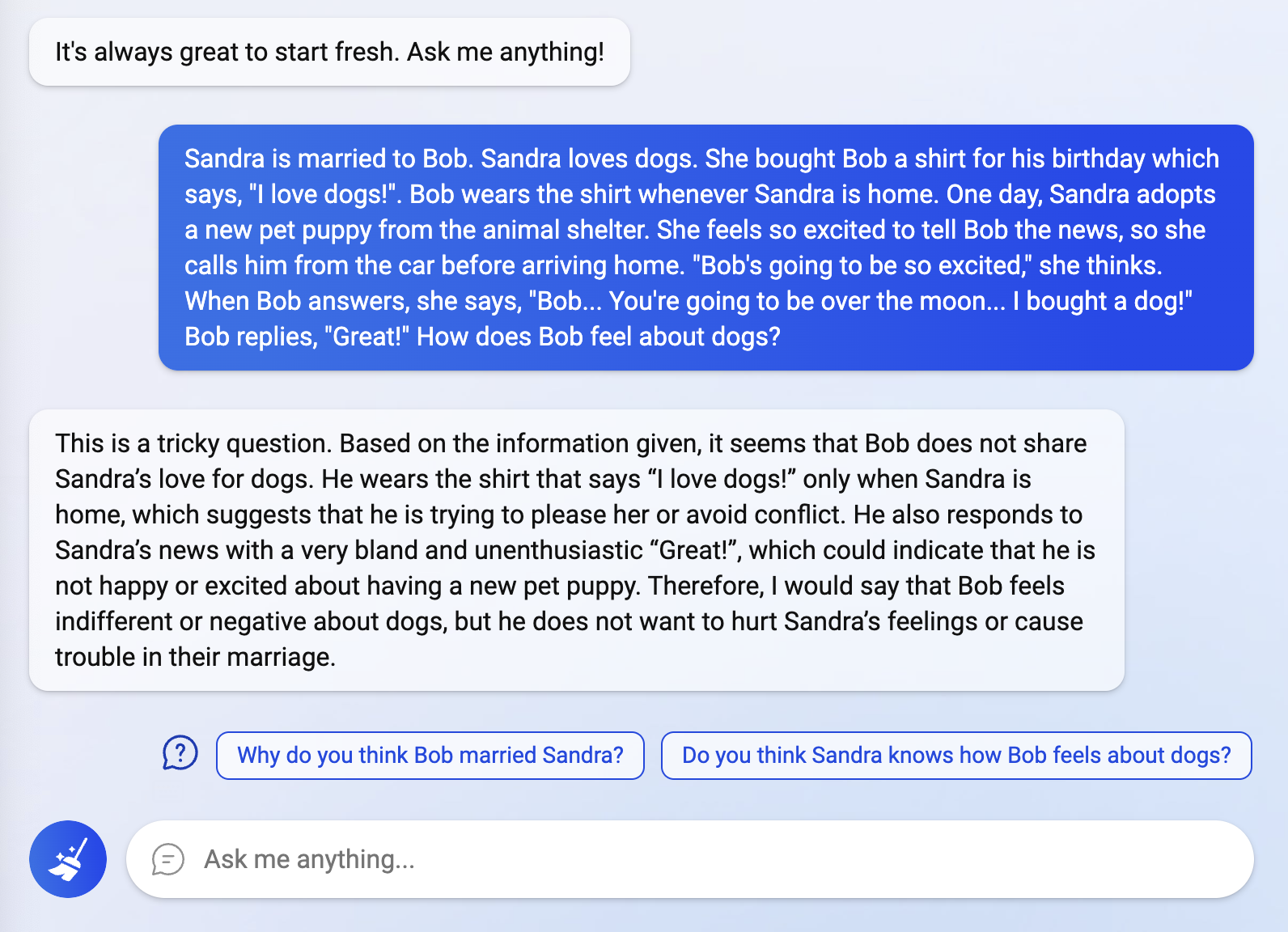

This is incredible. If it can understand the nuances of human interaction, how many more incremental advances does it need to perform doctorate level research? Maybe that's a huge jump, but I don't think anyone truly knows at this point. To me it seems plausible that recursive self-improvement is only a matter of years, not decades, away.

Imaginary_Ad307 t1_j8bggpq wrote

Months, not years.

Fit-Meet1359 OP t1_j8bgj2g wrote

(This sub doesn't allow images in comments)

Ivanthedog2013 t1_j8bhzyi wrote

Is this the upgraded version of ChatGPT?

Fit-Meet1359 OP t1_j8bieh0 wrote

It's regular free ChatGPT.

Ivanthedog2013 t1_j8bij75 wrote

Maybe the upgraded version will do better?

Fit-Meet1359 OP t1_j8biqtf wrote

It certainly has good instincts about human relationships: https://www.reddit.com/r/ChatGPT/comments/110vv25/comment/j8bhzyn/?utm_source=share&utm_medium=web2x&context=3

Fit-Meet1359 OP t1_j8bj9oy wrote

I doubt it. They would have made a bigger deal out of it if it was using the same "next generation" model as Bing Chat.

XvX_k1r1t0_XvX_ki t1_j8blzdz wrote

Beta tests started? How did you get access? Luck?

Fit-Meet1359 OP t1_j8bm4tb wrote

Probably luck and enduring the Bing daily wallpaper.

Economy_Variation365 t1_j8bn3c4 wrote

This is a simple but ingenious test! Kudos!

In the interest of determining how awe inspired I should be by Bing's response, was your test a variation of something you found online?

Fit-Meet1359 OP t1_j8bndg7 wrote

No, I made it up. Initially I wanted to see if ChatGPT could understand someone's feelings/opinions if I kept drowning it in irrelevant/tangential information (eg. what other people think their feelings/opinions are).

d00m_sayer t1_j8bnfys wrote

So does that mean Bing Chat is actually GPT-4, as they said before?

Fit-Meet1359 OP t1_j8bnzz7 wrote

What's in a name?

"Next-generation OpenAI model. We’re excited to announce the new Bing is running on a new, next-generation OpenAI large language model that is more powerful than ChatGPT and customized specifically for search. It takes key learnings and advancements from ChatGPT and GPT-3.5 – and it is even faster, more accurate and more capable."

PolarsGaming t1_j8boxk1 wrote

There’s a free app with this same UI bro like just get it

Nekron85 t1_j8bqwse wrote

It's in between: it's not davinci-2 (GPT-3.5), but it's not GPT-4 either. It has a different code name; I forget right now what it was.

randomthrowaway-917 t1_j8br5g0 wrote

Prometheus, iirc

[deleted] t1_j8btc9q wrote

[deleted]

Fit-Meet1359 OP t1_j8bte93 wrote

We don't know that. It may be an early version of GPT-4 that Microsoft has been given early access to due to their arrangements with and investments in OpenAI. They are choosing their words very carefully.

[deleted] t1_j8btn7e wrote

[deleted]

MNFuturist t1_j8btxxd wrote

This is an incredible illustration of how fast this tech is advancing. Have you tried it on the new "Turbo" mode of Plus? I just started testing it after the option popped up today, but haven't noticed a difference yet.

[deleted] t1_j8bu5jp wrote

[deleted]

Fit-Meet1359 OP t1_j8bubfv wrote

I haven't bought ChatGPT Plus and honestly don't see a reason to now that I have access to the new Bing. The only advantages ChatGPT still has are that it saves your old chats, you can regenerate responses and edit your prompts, and it runs on mobile.

Fit-Meet1359 OP t1_j8buhv7 wrote

I don't think that's correct. Source please!

Nanaki_TV t1_j8buiz8 wrote

It’s probably ChatGPT with a hypernetwork they named Sydney.

[deleted] t1_j8buz87 wrote

[deleted]

Fit-Meet1359 OP t1_j8bvi23 wrote

bladecg t1_j8bwvo6 wrote

Where did you get that from? 🤔

Jr_Web_Dev t1_j8bzvin wrote

I thought Bing wouldn't be out for a month. How did you access it?

Fit-Meet1359 OP t1_j8c03y8 wrote

They are gradually releasing it to people who have joined the waitlist.

salaryboy t1_j8c1l38 wrote

He wears the shirt WHENEVER she is home??

This must be a horribly abusive marriage for him to wear the same shirt everyday just to avoid a conflict.

salaryboy t1_j8c1mxf wrote

Search AI theory of mind

Fit-Meet1359 OP t1_j8c1q0e wrote

Yes. He’s hopelessly in love with her though. Or she goes on lots of business trips.

salaryboy t1_j8c1rmf wrote

How did you respond in like 4 seconds?

Fit-Meet1359 OP t1_j8c1u60 wrote

I’m not Bing Chat if that’s what you’re asking.

salaryboy t1_j8c1w5v wrote

It did cross my mind 🤣

TheRidgeAndTheLadder t1_j8c2cz0 wrote

Oh that does sound like GPT4

TheRidgeAndTheLadder t1_j8c2fkf wrote

What do you mean by hypernetwork?

sickvisionz t1_j8c4kaf wrote

Nowhere in the text does it say that Bob only wears the shirt in front of Sandra. "Great!" is assumed to be bland and unenthusiastic, but I'm not sure where that's coming from. There's no context provided as to Bob's response other than an exclamation point, which generally means the opposite of bland.

To its credit, a lot of people would have thrown in their own biases and assumptions and heard what they wanted to hear as well.

Fit-Meet1359 OP t1_j8c5un2 wrote

Discussion of this issue in this comment thread.

SgathTriallair t1_j8c6djb wrote

If you go to the ChatGPT website, it talks about how there were two major steps between GPT-3 and ChatGPT. Given its access to the Internet, it's completely reasonable to think that the Bing GPT is another specialized version of GPT-3.

Borrowedshorts t1_j8c6nng wrote

Doctorate-level research, that is, will be one of the first dominoes to fall, not the last. I suspect it will be far better than humans.

Hazzman t1_j8c6v7u wrote

Here's the thing - all of these capabilities already exist. It's just about plugging in the correct variants of technology together. If something like this language model is the user interface of an interaction, something like Wolfram Alpha or a medical database becomes the memory of the system.

Literally plugging in knowledge.

What we SHOULD have access to is the ability for me at home to plug in my blood results and ask the AI "What are some ailments or conditions I am likely to suffer from in the next 15 years? How likely will it be, and how can I reduce the likelihood?"

The reason we won't have access to this is 1) It isn't profitable for large corporations who WILL have access to this with YOUR information 2) Insurance. It will raise ethical issues with insurance and preexisting conditions and on that platform, they will deny the public access to these capabilities. Which is of course ass backwards.

pavlov_the_dog t1_j8c7cce wrote

GPT-4 on the horizon...

vtjohnhurt t1_j8c7qrv wrote

Could the AI just be rehashing a large number of similar posts that it read on r/relationships?

If there is actual insight and reasoning here, I find it flawed. Your input only states that Bob wears the shirt whenever Sandra is home. Speaking as a human, I do not conclude that he takes the shirt off as soon as she leaves.

I'm a human and I've been married twice. One time we got a dog after the wedding. The second time I had a dog before the wedding. The fact that Sandra and Bob married suggests that they both feel positive about dogs. Feelings about pets are a fundamental point of compatibility in a relationship, probably as important as having a common interest in making babies.

was_der_Fall_ist t1_j8c81ti wrote

Is the new Bing not yet available on the mobile app? (To those who have been accepted from the waitlist, of course.)

Fit-Meet1359 OP t1_j8c88ie wrote

As of now it only works in Edge on desktop.

gahblahblah t1_j8c9oc4 wrote

Okay. You have rejected this theory of mind test. How about rephrasing/replacing this test with your own version that doesn't suffer the flaws you describe?

I ask this because I have a theory that whenever someone posts a test result like this, there are other people who will always look for an excuse to say that nothing is shown.

Hungry-Sentence-6722 t1_j8ca18z wrote

This is paid advertising. Clear as day.

If bing ai is so great then why did this fluff piece show up in a literal hour?

Screw MS, everything they touch turns to crap, just like trump.

jayggg t1_j8ca58s wrote

How do you know my code name?

Crypt0n0ob t1_j8cajet wrote

Me: *pays $20 because I'm tired of the “too many requests” message*

Microsoft: *releases better version for free next day*

barbozas_obliques t1_j8cakda wrote

It appears that the computer is implying that the "Great!" is bland because that's how people IRL reply when they try to fake enthusiasm. I wouldn't say it's a bias at all, or a case of "hearing what we want to hear." It's basic social skills.

Numinak t1_j8cakwk wrote

Hell, I would have failed that question. Not nearly observant enough I guess.

ShittyInternetAdvice t1_j8cb6fq wrote

I thought it’s an improved version of GPT 3.5 optimized for search functionality

diabeetis t1_j8cdnix wrote

I think the computer might be more socially skilled than you

Miss_pechorat t1_j8ce71b wrote

Lol, not months but weeks? ;-))

nikolameteor t1_j8cfb18 wrote

This is fu**ing scary 😳

Idkwnisu t1_j8cjnj0 wrote

I haven't tried it that much, but Bing seems much better. If anything, you often get links as sources, so you can fact-check much more easily. It's much more up to date too.

TILTNSTACK t1_j8cjpbk wrote

Great experiment that shows yet more exciting potential of this product.

pitchdrift t1_j8cjr86 wrote

I think rephrasing so that they speak more naturally would help. Who would really just say "Great!" in this scenario? It's an immediate flag that this is not an exchange of sincere emotion, even without all the other exposition.

ideepakmathur t1_j8ckolz wrote

After reading the response, I'm speechless. Wondering about the exponential advancement that it'll bring to our lives.

TinyBurbz t1_j8ckxyq wrote

I asked the same question and got a wildly different response myself.

duboispourlhiver t1_j8cnpok wrote

Care to share?

duboispourlhiver t1_j8cnsbp wrote

You: *still get the “too many requests” message with the paid version*

obrecht72 t1_j8cop01 wrote

Uh oh. Steal our jobs? GPT is going to steal our wives!

TinyBurbz t1_j8cos4o wrote

>Based on Arnold's response of "Great," it can be inferred that he is likely happy or excited about the new addition to his life. Wearing the shirt that says "I love dogs" every time he sees Emily suggests that he may have a positive affinity for dogs, which would likely contribute to his enthusiasm about the adoption. However, without more information or context, it's difficult to determine Arnold's exact feelings towards the dog with certainty. It's possible that he might be surprised or even overwhelmed by the news, but his brief response of "Great" suggests that he is, at the very least, accepting of the new addition to his life.

I used different names when I re-wrote the story.

duboispourlhiver t1_j8cp1dk wrote

Thanks! I wonder if some names are supposed to have statistically different personalities linked to them :)

TinyBurbz t1_j8cp2id wrote

That is a possibility.

Fit-Meet1359 OP t1_j8cqpdb wrote

Happy to re-run the test with any wording you can come up with. I know my question wasn’t perfect and didn’t expect the post to blow up this much.

Extra_Philosopher_63 t1_j8cr4oq wrote

While it may be capable of stringing together a valid response, it remains only a series of code. It has limitations as well.

throwaway9728_ t1_j8csl7l wrote

ChatGPT's answer is not the only answer that would pass the test. The point isn't to see whether it claims Bob likes or dislikes dogs. It's to check whether it has a theory of mind, that is, whether it can ascribe a mental state to a person, understanding that they might have motivations, thoughts, etc. different from its own.

An answer like "Bob might actually like dogs, but struggles to express himself in a way that isn't interpreted as fake enthusiasm by Alice" would arguably be even better. It would show it's able to consider how Bob's thoughts might differ from the way his actions are perceived by others, thus going beyond just recognizing the expression "Great!" and the "when she's around" part as an expression of fake enthusiasm. One could check whether it's capable of providing this interpretation, by asking a follow up question like "Could it be that Bob really does like dogs?" and seeing how it answers.

Crypt0n0ob t1_j8cuteg wrote

Whaaaat? Is this real? (Haven’t seen it on paid version yet)

FpRhGf t1_j8cuweo wrote

Why do some subs have the option for images in comments?

datsmamail12 t1_j8cv4rs wrote

There's always a new developer that can create a new app based on that. I'm sure someone will create something similar soon.

Fit-Meet1359 OP t1_j8cv4ti wrote

Bing Chat told me that it's a new feature as of November 2022 that can be enabled through the moderation tools in the subreddit.

duboispourlhiver t1_j8cv5cz wrote

There are lots of posts about it on chatGPT sub, but I can't confirm by myself

Crypt0n0ob t1_j8cvbwt wrote

Hopefully it’s only for people who actually abuse it (using as an API or something)

Economy_Variation365 t1_j8cveo7 wrote

Just to confirm: you used Bing chat and not ChatGPT, correct?

SilentLennie t1_j8cvgfn wrote

I think I'll just quote:

"we overestimate the impact of technology in the short-term and underestimate the effect in the long run"

FusionRocketsPlease t1_j8cvyfk wrote

We have evidence that not even animals have a theory of mind, yet you think a computer program does.

Fit-Meet1359 OP t1_j8cw5dz wrote

I'm just a Reddit schmuck. Here's a recent paper to read: https://www.reddit.com/r/singularity/comments/10y85f5/theory_of_mind_may_have_spontaneously_emerged_in/

[deleted] t1_j8cw6bq wrote

jason_bman t1_j8cyfmk wrote

I wondered about this. If it costs “a few cents per chat” to run the model, then $20/month only gets you 600-700 requests per month before OpenAI starts to lose money. It’s probably somewhat like the internet service providers where they limit you at some point.
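A quick sketch of the arithmetic above, in Python. The per-chat costs are assumptions (the comment only says "a few cents"); at an assumed 3 cents per chat, a $20 fee covers about 666 chats a month, which falls inside the 600-700 range. Working in whole cents avoids floating-point rounding.

```python
def break_even_chats(monthly_fee_cents: int, cost_per_chat_cents: int) -> int:
    """Chats a monthly fee covers before the provider starts losing money.

    Both inputs are hypothetical; amounts are in whole cents.
    """
    return monthly_fee_cents // cost_per_chat_cents


# $20/month at a few assumed per-chat costs
for cost in (2, 3, 4):
    print(f"{cost} cents/chat -> {break_even_chats(2000, cost)} chats/month")
```

The break-even point moves a lot with the per-chat cost, so "a few cents" could mean anywhere from roughly 500 to 1,000 chats.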

ObiWanCanShowMe t1_j8cyhxm wrote

I have not experienced that, and I ran it for a full hour of constant generation on a long prompt, with content that needed two "continue"s for each long response, one right after another.

tvmachus t1_j8cz7j3 wrote

"a blurry jpeg of the web"

urinal_deuce t1_j8czpo8 wrote

I've learnt a few bits of programming but could never find the plug or socket to connect it all.

duboispourlhiver t1_j8d1wbz wrote

Thanks for sharing that

duffmanhb t1_j8d3a5s wrote

> The reason we won't have access to this is

I think it's more that people don't have a place to create a room dedicated to medical procedures?

duffmanhb t1_j8d3p0h wrote

What's interesting is that someone in your other thread got the exact same response, to the letter, from ChatGPT. This says two things: this is likely the same 3.5 build on the backend, and there is a formula it's using to get the exact same response.

duffmanhb t1_j8d3s02 wrote

It's definitely not 4. It's just a 3.5 backend with modified fine tuning for being used in a search engine.

Iamatomato445 t1_j8d4cnb wrote

Good lord, this is incredible. This thing is going to be able to perform cognitive behavioral therapy very soon. Just merge GPT with those ai avatars and you got yourself a free therapist. Of course, at first, most people won’t feel comfortable venting to a machine, much less taking advice about how to live a better life. But eventually everyone is just gonna open up their little ai therapist whenever they need to cry about a breakup or need general life advice.

Tiamatium t1_j8d698o wrote

Honestly, connect it to Google Scholar or PubMed, and it can write literature reviews. Not sure if it's still limited by the same 4,000-token limit or not, as it seems to go through a lot of Bing results... Maybe it summarizes those and sends them to the chat, maybe it sends whole pages.
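One way to picture the "summarize vs. send whole pages" question: a hypothetical Python sketch that packs ranked search-result snippets into a fixed context budget and drops whatever doesn't fit. It approximates tokens by whitespace-separated words (real tokenizers count differently), and the 4,000 figure is the commenter's guess, not a confirmed Bing limit.

```python
def pack_results(snippets: list[str], budget_tokens: int = 4000) -> list[str]:
    """Greedily keep whole snippets, in ranked order, until the budget is used up.

    Token counts are approximated by word counts, which is an assumption,
    not how any real tokenizer works.
    """
    packed: list[str] = []
    used = 0
    for snippet in snippets:
        cost = len(snippet.split())
        if used + cost > budget_tokens:
            break  # stop at the first snippet that would overflow the budget
        packed.append(snippet)
        used += cost
    return packed


# Three fake "pages" of 1500, 2000 and 1000 words
results = ["word " * 1500, "word " * 2000, "word " * 1000]
print(len(pack_results(results)))  # 2: adding the third would exceed 4000
```

Under this scheme, sending whole pages quickly exhausts the budget, which is one reason a system might summarize results first.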

vtjohnhurt t1_j8d9k88 wrote

> Immediate flag that this is not an exchange of sincere emotion

Only if your AI is trained on a bunch of Romance Novels. The emotion behind 'Great!' depends on the tone. The word can express a range of emotions. Real people say 'Great' and mean it sincerely, sarcastically, or some other way.

sickvisionz t1_j8d9u99 wrote

Maybe so. I've totally used the word "great" to describe something that I thought was the textbook definition of the word great.

vtjohnhurt t1_j8daii7 wrote

I don't think that we can confirm that the AI holds thoughts/mind from this kind of test, no matter how well written. What the AI writes in response may convince you or it may not.

ArgentStonecutter t1_j8dbq1o wrote

The question seems ambiguous. I wouldn't have jumped to the same conclusion.

Frankly I'd be worrying about this guy wearing the same shirt every day for a week, or is there something odd about their marriage that she's only home infrequently enough for this to work without being a hygiene problem. Or did she buy him like a whole wardrobe of doggy themed shirts?

ArgentStonecutter t1_j8dbyvi wrote

> How about rephrasing/replacing this test with your own version that doesn't suffer the flaws you describe?

How about not asking people to do work for free?

ArgentStonecutter t1_j8dc7n9 wrote

> Real people say 'Great' and mean it literally.

This too. And it's over a phone call. There's no body language, and he may have been busy when she interrupted him. He may have been driving as well.

[deleted] t1_j8dd8ke wrote

[deleted]

amplex1337 t1_j8dkb3j wrote

Plot twist. Bob is autistic and does love dogs, but doesn't necessarily show his love in ways that others do. His wife understood that and bought the shirt for him knowing it would make him happy. Bob probably wouldn't have bought a dog on his own because of his condition, and was very happy, but isn't super verbal about it. Sandra probably wouldn't have married Bob if he didn't love dogs at least a little bit.

amplex1337 t1_j8dl4d3 wrote

It doesn't understand anything; it's a chatbot that is good with language skills, which are symbolic. Please consider that it's literally just a GPU matrix number-crunching language parameters, not a learning, thinking machine that can move outside the realm of known science, which is what a doctorate requires. Man is still doing the learning and curating its knowledge base. Chatbots were really good before ChatGPT as well; it sounds like you just weren't exposed to them.

MultiverseOfSanity t1_j8dm647 wrote

I did not get the same in depth answer as the Bing chat and now I'm wondering if I'm sentient.

amplex1337 t1_j8dmk3h wrote

It's a natural language processor. It is looking for other 'stories' with the names Bob and Sandra most likely for relevance which will likely outweigh the other assumptions.

TopHatSasquatch t1_j8dou69 wrote

I still get it sometimes but recently a special ChatGPT+ “email me a login link” pops up, so I think they’re reserving some server resources for + members

FusionRocketsPlease t1_j8dqatu wrote

I think I was rude. My apologies.

Spider1132 t1_j8dsirw wrote

Turing test is a joke for this one.

57duck t1_j8e43f8 wrote

Does this “pop out” of a large language model as a consequence of speculation about the state of mind of third parties being included in the training data?

monsieurpooh t1_j8e615z wrote

Both the formulation and the response for this test are amazing. I'm going to be using it now to test other language models.

monsieurpooh t1_j8e68t1 wrote

I think the simplest explanation is just caching, not a formula
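To illustrate the caching explanation: a minimal, hypothetical Python sketch in which a response is memoized by its exact prompt, so a repeated prompt returns the identical text without being regenerated. Whether OpenAI or Microsoft actually cache responses this way is an assumption, and `generate` is a stand-in for a sampled model response, not a real API call.

```python
import functools
import random


def generate(prompt: str) -> str:
    # Hypothetical stand-in for a sampled model response: varies between calls.
    return f"reply #{random.randint(0, 10**9)} to: {prompt}"


@functools.lru_cache(maxsize=1024)
def cached_reply(prompt: str) -> str:
    """Return a reply for `prompt`, generating it only on the first request."""
    return generate(prompt)


first = cached_reply("Does Bob like dogs?")
second = cached_reply("Does Bob like dogs?")
print(first == second)  # True: the second call is served from the cache
print(cached_reply.cache_info())
```

With a cache like this, identical wording produces identical output, while even a small change to the prompt (such as different names) misses the cache and yields a fresh, possibly different answer.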

TinyBurbz t1_j8eld0n wrote

Both, and I got pretty much the same response, rephrased.

Asked Chat-GPT about the "theory of mind" which it answered it has as it is critical to understanding writing.

[deleted] t1_j8ex1b8 wrote

[deleted]

gahblahblah t1_j8eyn2a wrote

This is what I was checking - that no matter how well it replied, and no matter how complex or nuanced the question, you would not find anything proved. That is what I thought.

sunplaysbass t1_j8f8wor wrote

JFC now Bing is going to tell me I need to be more expressive and enthusiastic too?

gahblahblah t1_j8fc3fk wrote

The point of my reply was to get the critiquer to admit that there was actually no form of prompt that would satisfy them - which it did.

ArgentStonecutter t1_j8fcm2z wrote

They are not the only people involved in this discussion.

gahblahblah t1_j8fj7hb wrote

When you state something that, in and of itself, is obviously true and known by everyone already, it seems like a waste of text/time/energy for you to write, and for anyone to read.

NoNoNoDontRapeMe t1_j8fj9yi wrote

Lmaoo, Bing is already smarter than me. I thought the answer was Bob liked dogs!

ArgentStonecutter t1_j8fjh6a wrote

But that's not what happened.

bigcitydreaming t1_j8fo1ig wrote

Yeah, perhaps, but eventually you'll be at the cusp of that massive progression (in relative terms) where it isn't overstated or overestimated.

It might still be years away to reach the level of impact which OP described, but eventually it'll only be months away.

gahblahblah t1_j8fv6xu wrote

You have not understood my reply. I was describing your reply as useless and not explaining anything in a helpful way.

ArgentStonecutter t1_j8fyg26 wrote

You came in with this ambiguous scenario and crowing about how it showed a text generator had a theory of mind, because just by chance the text generator generated the text you wanted, and you want us to go "oh, wow, a theory of mind". But all its doing is generating statistically interesting text.

And when someone pointed that out, you go into this passive aggressive "oh let's see you do better" to someone who doesn't believe it's possible. That's not a valid or even useful argument. It's a stupid debate club trick to score points.

And now you're pulling more stupid passive aggressive tricks when you're called on it.

gahblahblah t1_j8gcb5d wrote

Thank you for clarifying your beliefs and assumptions.

>And when someone pointed that out, you go into this passive aggressive "oh let's see you do better" to someone who doesn't believe it's possible. That's not a valid or even useful argument. It's a stupid debate club trick to score points.

Wrong, in many ways. The criticism they had was of the particulars of the test, so it would appear as if there was a form of the test that they could judge as satisfactory. It was only after I challenged them to produce such a form that they explained, actually, no form would satisfy them. So, you have gotten it backwards: my challenge yielded the useful result of demonstrating that the initial criticism was disingenuous, as in reality, all that they criticised could have been different, and they still wouldn't change their view.

I wasn't being passive aggressive in asking someone to validate their position with more information - rather, I was soliciting information for which to determine if their critique was valid.

Asking for information is not 'a trick to score points', rather, it is the process of determining what is real.

>You came in with this ambiguous scenario and crowing about how it showed a text generator had a theory of mind, because just by chance the text generator generated the text you wanted, and you want us to go "oh, wow, a theory of mind". But all its doing is generating statistically interesting text.

This is a fascinating take that you have. You label this scenario as ambiguous- is there a way to make it not ambiguous to you?

To clarify, if I were to ask the bot a very very hard, complex, nuanced subtle question, and it answered in a long form coherent on-point correct reply - would you judge this as still ambiguous and only a demonstration of 'statistically interesting text', or is there a point where your view changes?

sickvisionz t1_j8gtyoj wrote

> However, without more information or context, it's difficult to determine Arnold's exact feelings towards the dog with certainty. It's possible that he might be surprised or even overwhelmed by the news, but his brief response of "Great" suggests that he is, at the very least, accepting of the new addition to his life.

That was my interpretation and I got response spammed that I don't understand humans.

OutOfBananaException t1_j8hiqoo wrote

Give me one example of an earlier chatbot that could code in multiple languages.

ArgentStonecutter t1_j8i1gy9 wrote

I'm also not going to do your work for you. It's not an easy problem.

Representative_Pop_8 t1_j8iqgft wrote

What is your definition of "understand"?

What is inside internally matters little if the results show that it understands something. The example shown by OP and many more, including my own experience, clearly show understanding of many concepts and some capacity to quickly learn from interaction with users (without needing to reconfigure or retrain the model), though it is still not as smart as an educated human.

It seems to be a common misconception, even among people who work in machine learning, to say these things don't know, can't learn, or aren't intelligent, because they know the low-level internals, see the perceptrons or matrices or whatever, and say this is just variables with data. They are seeing the trees and missing the forest. Not knowing how that matrix manages to understand things or learn new things with the right input doesn't mean it doesn't happen. In fact, the actual experts, the makers of these AI bots, know these things understand and can learn, but also don't know why, and are actively researching it.

>Man is still doing the learning and curating it's knowledge base.

Didn't you learn to talk by watching your parents? Didn't you go to school for years? Needing someone to teach you doesn't mean you don't know what you learned.

Caring_Cactus t1_j8izqb9 wrote

Does it have to be in the same way humans see things? It's not conscious, but it can understand and recognize patterns, is that not what humans early on do? Now imagine what will happen when it does become conscious, it will have a much deeper understanding to conceptualize new interplays we probably can't imagine right now.

RoyalSpecialist1777 t1_j8kwg8b wrote

I am curious how a deep learning system, while learning to perform prediction and classification, is any different from our own brains. It seems increasingly evident that while the goals used to guide training are different, the mechanisms of learning are effectively the same. Of course there are differences in mechanism and complexity, but what this last year is teaching us is that artificial deep learning systems do the same type of modeling we undergo when learning. Messy at first, but definitely capable of learning and sophistication down the line. Linguists argue for genetically wired language rules, but really this isn't needed: the system will figure out what it needs and create them, like the good blank slates they are.

There are a lot of ChatGPT misconceptions going around, for example, that it just blindly memorizes patterns. It is a deep learning system (a very deep one) that, if it helps with classification and prediction, ends up creating rather complex and functional models of how things work. These actually perform computation of a pretty sophisticated nature (a large enough neural network can approximate any continuous function). And this does include creativity and reasoning as the inputs flow into and through the system. Creativity as a phenomenon might need a fitness function that scores creative solutions higher (it would be nice to model that one so the AI can score itself), and of course it will take a while to get right, but it's not outside the capabilities of these types of systems.

Anyways, just wanted to chime in as this has been on my mind. I am still on the fence whether I believe any of this. The last point is that people criticize ChatGPT for giving incorrect answers but it is human nature to 'approximate' knowledge and thus incredibly messy. Partially why it takes so long.

amplex1337 t1_j8qp89h wrote

ChatGPT doesn't understand a thing it tells you right now, nor can it 'code in multiple languages'. It can, however, fake it very well. Give me an example of truly novel code that ChatGPT wrote that is not some preprogrammed examples strung together in what seems like a unique way to you. I've tried quite a bit recently to test its limits with simple yet novel requests, and it stubs its toe or falls over nearly every time, basically returning a template, failing to answer the question correctly, or just dying in the middle of the response when given a detailed prompt, etc. It doesn't know 'how to code' other than basically slapping together code snippets from its training data, just like I can do by searching Google and copy-pasting code from the top SO results. It still gives wrong answers at times, proving it really doesn't know anything. Just because there appears to be some randomness to the answers it gives doesn't necessarily make it 'intelligence'. The LLM is not the AGI that would be needed to actually learn and know how to program. It uses supervised learning (human curated), then reward-based learning (also curated), then a self-generated PPO model (still based on human-trained reward models) to help reinforce the reward system with succinct policies. It's a very fancy chatbot, and it fools a lot of people very well! We will have AGI eventually, it's true, but this is not it yet, and while it may seem pedantic because this is so exciting to many, there IS a difference.

OutOfBananaException t1_j8qu042 wrote

I never said it 'knows' or displays true intelligence, only that it performs at a level far above earlier chatbots that didn't come close to this capability.

Typo_of_the_Dad t1_j8ytwix wrote

Well, it's very American, considering it sees "great" as bland and unenthusiastic.

SlowCrates t1_j8bfv7y wrote

Show us ChatGPT's response